Via @rodhilton@mastodon.social

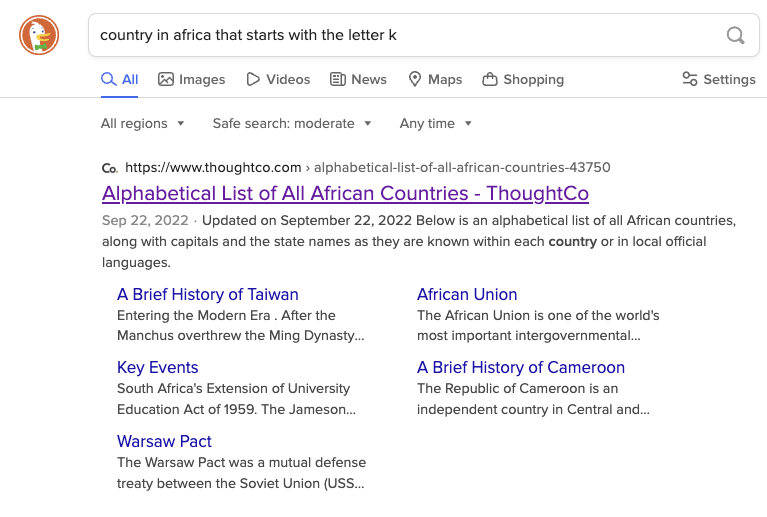

Right now if you search for “country in Africa that starts with the letter K”:

- DuckDuckGo will link to an alphabetical list of countries in Africa which includes Kenya.
- Google, as the first hit, links to a ChatGPT transcript where it claims that there are none, and summarizes to say the same.

This is because ChatGPT at some point ingested this popular joke:

“There are no countries in Africa that start with K.” “What about Kenya?” “Kenya suck deez nuts?”

Yes, but if I ask a calculator to add 2 + 2, it’s always going to tell me the answer is 4.

It’s never going to tell me the answer is Banana, because calculators cannot get confused.

Is it really always giving you that answer? What if you’re in a different mode? There is no 2 in the binary system. You could already have pressed “3 +” beforehand, so the result will be 7.
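To make that concrete, here is a rough sketch of a toy calculator that keeps a running total. `ToyCalculator` and its `add` method are made-up names for illustration, not how any real calculator is implemented. The same "2 + 2" keystrokes give 4 on a fresh calculator and 7 if "3 +" was already entered, and base-2 input rejects the digit 2 outright:

```python
class ToyCalculator:
    """Hypothetical calculator that only adds to a running total."""

    def __init__(self):
        self.total = 0

    def add(self, n):
        # Every entry is added to whatever was already there.
        self.total += n
        return self.total


# Fresh calculator: 2 + 2
fresh = ToyCalculator()
fresh.add(2)
fresh_result = fresh.add(2)

# Someone already pressed "3 +" before we typed 2 + 2
used = ToyCalculator()
used.add(3)
used.add(2)
used_result = used.add(2)

# In binary mode the digit "2" is not valid input at all
try:
    int("2", 2)
    binary_accepts_2 = True
except ValueError:
    binary_accepts_2 = False

print(fresh_result, used_result, binary_accepts_2)
```

Still deterministic in every case, but the answer depends on state and mode that the person typing may not be aware of.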

I see your point from a technical point of view. It’s deterministic. But thinking about it more hypothetically, I see LLMs as some kind of complex calculator for language.