I kind of want to self host a lemmy instance. What are the requirements for a single user lemmy instance?

Depends on how many communities you subscribe to and how much activity they have.

I’m running my single-user instance, subscribed to 20 communities, on a 2c/4g VPS that also hosts my Matrix server and a bunch of other stuff. Right now I mostly see CPU peaks of 5-10% and RAM at 1.5GB.

I have been running it for 15 months and the Docker volumes total 1.2GB. A single pg_dump of the Lemmy database in plain text is 450M.

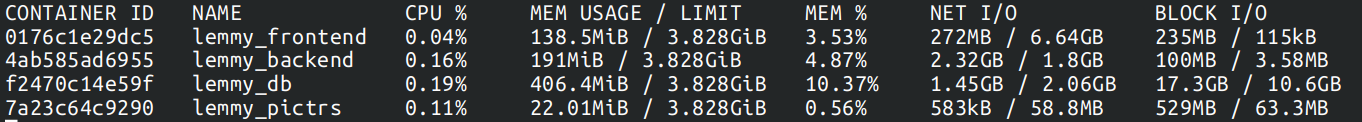

For the whole stack in the past 16 hours

# docker-compose stats

Thanks.

I put up some docker stats in a reply, in case you missed it on the refresh.

Checked it out, thanks.

These stats are fine and all, but storage and network are what’s going to get you in the end if you open it up to anyone and everyone and it becomes popular.

I subscribe to a few more communities and my DB dump is about 3GB plain text, but same story, box sits at 5-15% most of the time.

How much storage did it use overall? I had one instance running on a VM with a 40GB HDD, and it ran out of disk space in about 2.5 months. From what I saw, the split was roughly 66.6/33.3 between Postgres and images plus other media. I didn’t have the time to learn how to clean it up and prevent it from happening again after a month. This all happened from around May to August last year.

It’s sitting at around 46GB at the moment, not too bad.

The instance is a year and a few months old, so I could probably trim down the storage a bit if needed by purging stuff older than 6 months or something.

I think it initially grows as your users table fills up and pictrs caches the profile pictures, and then it stabilizes a bit. I definitely saw much more growth initially.

I did this for a while. However, after subscribing to several groups, there was constant disk activity and it ate network bandwidth. After two months I stopped my server and went back to using a public instance.

Sure. It’s constantly pulling all the posts, comments and likes from potentially hundreds of instances and writing them to its database to make them accessible to you once you decide to open Lemmy. It’ll get updates from the network every few seconds (unless all the Americans are asleep) and that’ll cause some database operations on your side.

Concerning the requirements: You’ll need some form of server, and probably a domain name. If you’re doing it at home, make sure you have a proper IP address and can forward ports. I run a Piefed instance, not Lemmy. It uses a few hundred megabytes of RAM and a bit of CPU and disk. It doesn’t cache media files as Lemmy does, so I can’t comment on the storage size. It’s 3GB for me.

Thanks.

How intensive was it exactly?

I didn’t notice any big drops in network or CPU performance, usually because other network traffic had priority. But my server’s HDD constantly rattling along got me thinking that it wasn’t worth it. There are several other containers running on that box and I don’t have that much HDD activity with them.

There are postgres settings to reduce disk writes. There’s a max size and a timeout to write to disk. By default these values are on the lower end I believe.
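The comment doesn’t name the settings. My guess is that it means the checkpoint parameters `checkpoint_timeout` and `max_wal_size`, whose stock defaults are 5min and 1GB. That is an assumption, not something stated in this thread. Under that assumption, here is a minimal Python sketch that turns a dict of overrides into the `-c name=value` arguments the `postgres` server binary accepts. The values are illustrative only, and `postgres_args` is a hypothetical helper.

```python
import shlex

# Illustrative overrides, not tuned recommendations (assumed settings).
# Stock defaults are checkpoint_timeout=5min and max_wal_size=1GB.
OVERRIDES = {
    "checkpoint_timeout": "15min",
    "max_wal_size": "4GB",
}


def postgres_args(overrides: dict) -> list:
    """Build a `postgres -c name=value ...` argument list from overrides."""
    args = ["postgres"]
    for name, value in overrides.items():
        args += ["-c", f"{name}={value}"]
    return args


command = postgres_args(OVERRIDES)
print(shlex.join(command))
```

With the official postgres image, a list like this can usually be set as the container’s `command` in the compose file. Longer checkpoint intervals tend to mean fewer write bursts, at the cost of a longer crash recovery.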

Yeah, but I didn’t want to fiddle with some custom settings. The same official postgres container works great with other apps.

Yeah, it works well until it’s under load, which federation does produce. Matrix and Lemmy both got like 20GB of RAM dedicated to the database on my servers.

Thanks.

I have one running on the equivalent of a pi. It works no problem. The biggest issue is the constant io and network traffic but it’s not terrible.

I wish there were an option to poll only once every x amount of time instead of the constant polling, but it’s a good solution. I use lemmy.world as the main account and the other account when I need to post under my real name with some projects I run. Plus it makes for a good development instance since I work on Lemmy from time to time.

Raspberry Pi will handle it.

It’s all about the storage space. Processing requirements are minimal.

You really only need Storage. Backblaze B2/Wasabi/Cloudflare R2 if you can afford it, or just get a Hetzner storage box, attach it to the VM, run Minio and off you go.

> Attach it to the VM

Is this possible only with the extra, paid storage boxes? Or is it possible even with the free 100G backup boxes offered with each dedicated machine? (Or is this just an NFS mount?)

We have a dedicated machine from Hetzner in a project, with big RAIDed hard disks, but the latency is starting to creep up on us. Moving some of the data off to the faster SSD/SAN boxes would be rather helpful.

Numbers from my instance, which has been running for about a year with ~2 MAU on average. According to some quick DB queries, there are currently 580 actively subscribed communities (it was probably a lot fewer before I used the subscribe bot to populate the All tab).

`SELECT pg_size_pretty( pg_database_size('lemmy') )`: 17 GB

Backblaze B2 (S3) reports an average of 22.5 GB stored. With everything capped to a maximum of 1 USD, I pay cents. I have no idea how Backblaze does it, but it’s really cheap, except for some specific transactions done on the bucket afaik, which pictrs does not seem to do.

According to my Zabbix monitoring, two months ago (I don’t keep longer stats) the DB had only about 14G of data, so with this many communities I am getting about 1.5G per month (it’s probably a bit more, as I was recently pruning stuff from some dead instances).
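The growth figure is simple arithmetic. Here is a minimal Python sketch using only the numbers quoted in this comment (14 GB two months ago, 17 GB now). The 40 GB disk in the runway estimate is borrowed from another comment in this thread purely as an illustration, and both function names are hypothetical.

```python
def monthly_growth_gb(size_then_gb: float, size_now_gb: float, months: float) -> float:
    """Average growth per month between two size measurements."""
    if months <= 0:
        raise ValueError("months must be positive")
    return (size_now_gb - size_then_gb) / months


def months_until_full(disk_gb: float, used_gb: float, growth_gb_per_month: float) -> float:
    """Months left before the disk fills, assuming growth stays linear."""
    if growth_gb_per_month <= 0:
        return float("inf")
    return max(disk_gb - used_gb, 0.0) / growth_gb_per_month


growth = monthly_growth_gb(14, 17, 2)        # figures from the Zabbix comparison
runway = months_until_full(40, 17, growth)   # 40 GB disk is an assumed example
print(f"{growth:.1f} GB/month, about {runway:.1f} months until a 40 GB disk is full")
```

This only covers the database. Media held in object storage grows separately, and linear growth is itself an assumption, since others in this thread saw faster growth early on.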

Prometheus says the whole Lemmy service (I use Traefik) is getting about 5 req/s (1m average), though at a finer resolution it spikes a lot: up to 12 requests within a second, then nothing for a few.

I wonder whether it’s really good to have single-user instances in the fediverse. Does it hurt fediverse servers to send to yet another location, or is it more like p2p, so it scales well?

The whole idea of the fediverse is decentralization and federation. It is a good thing.

It doesn’t require many resources. You can run it on a $5 Linode.