- cross-posted to:

- technology@lemmy.ml

I was taught how punch cards work and that databases used direct disk access. In 1990.

In college (1995) we learned COBOL and assembler, and pre-object-oriented Ada (closer to early Pascal than anything I can see on the wiki today). C was the ‘new thing’ that was on the machines, but we weren’t allowed to use it.

The curriculum has always been 20 years behind reality, especially in tech. Lecturers teach what they learned, not what is current. If you want to keep up you teach yourself.

I learned how “object-oriented databases” worked in college. After 20 years of work, I still don’t know if such a thing exists at all. I read books regularly instead.

Wiki says they existed and may still exist… I’ve never come across one. I thought MongoDB might be one, but apparently not.

Eplan, an electrical controls layout tool, used an object-oriented database as its file format. It still may. I saw recently that they entered a partnership of some sort with SolidWorks, so they’re still kicking.

I’ve used one before. MagLev is a Ruby runtime built atop GemStone/S, which is an object DB. It gives Ruby some distributed powers, like the ones BEAM languages (Elixir and Erlang) have.

Practically all it meant was you didn’t have to worry about serializing ruby objects to store them in your datastore, and they could be distributed across many systems. You didn’t have to use message buses and the like. It worked, but not as well as you’d hope.

Amusingly, BEAM languages have access to tools a lot like OODBMSes right out of the box: ets, dets, and mnesia loosely fit the definition of an OODB. BEAM is functional and doesn’t have objects at all, so the comparisons can be a tad strained.

Postgres also loosely satisfies the definition, with jsonb columns having first-class query support.

I had to take a COBOL class in the early 2000s. And one of the two C/C++ courses was 90% talking about programming and taking quizzes about data types and what functions do, and 10% making things just beyond “hello world.” And I’m still paying the student loans.

I’m currently studying cybersecurity, and I can speak positively about that. We’re taught C and Java in the programming course (Java is still ew, but C is everywhere and will be everywhere). I know of a course two friends took that taught Rust (I learned it at work; it’s great).

The crypto we learn is current stuff, except no EdDSA or Post Quantum stuff.

A course in college had an assignment which required Ada, this was 3 years ago.

If it was something like a language theory class, that’s perfectly valid. Honestly, university should be teaching heavily about various language paradigms and less about specific languages. Learning a language is easy if you already know a similar one, and you will always have to do it. For my past jobs, I’ve had to learn Scala, C#, Go, and several domain-specific or niche languages. All of them were easy to learn because my university taught me the general concepts and similar languages.

The most debatable language I ever learned in university was Prolog. For so long, I questioned whether I would ever have a practical use for it, but then I actually did, because I had to use Rego for work (which is more similar to Prolog than any other language I know).

What everyone would LIKE to learn is the exact skill that’s going to be rare and in high demand the second right after you graduate. But usually what’s rare and in high demand is also new, and there are no qualified teachers for it. Anyone who knows how to do the hot new thing is making bank doing it just like all the college grads want to do. My advice is to get out of college and then spend the next four working years learning as much as you can. You’re not going to hit the jackpot as a recent grad. You’re maybe going to get in the door as a recent grad.

In my university we learned that we should learn to learn.

It has proven to be very useful.

I got an Electronics Engineering degree almost 30 years ago (and, funnily enough, I then made my career as a software engineer and barely used the EE stuff professionally except when I was working in high-performance computing, but that’s a side note), and back then one of my software development teachers told us “Every 5 years half of what you know becomes worthless”.

All these years later, from my experience he was maybe being a little optimistic.
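Taken literally, the teacher’s rule of thumb is exponential decay with a five-year half-life. As a rough worked illustration (assuming, unrealistically, that the rule applies uniformly to everything you know), the fraction f of today’s knowledge still useful after t years would be:

```latex
f(t) = \left(\tfrac{1}{2}\right)^{t/5}, \qquad
f(30) = \left(\tfrac{1}{2}\right)^{6} = \tfrac{1}{64} \approx 1.6\%
```

Under that literal reading, only about 1.6% would survive three decades.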

Programming is something you can learn by yourself (by the time I went to Uni I was already coding stuff in Assembly purely for fun, and whilst EE did have programming classes, they added up to maybe 5% of the whole thing, though nowadays with embedded systems it’s probably more). But the most important things Uni taught me were the foundational knowledge (maths, principles of engineering, principles of design) and how to learn, and those have served me well in keeping up with half of what I know losing relevance every 5 years. Sometimes that happened in unexpected ways: obscure details of microprocessor design I learned there turned out to be useful when designing high-performance systems; the rationale behind past designs sped up my learning of new things, purely because the reasons stuff is designed the way it is are still the same; and even trigonometry turned out to be essential decades later for game development.

So even in a fast-changing field like software engineering, a degree does make a huge difference, not from memorizing bleeding-edge knowledge but from the foundational knowledge you get, the understanding of the thought processes behind designing things, and learning to learn.

Also a software engineer… I look back on my undergrad fondly but was it really that helpful? … nah.

I also put no stock in learning how to learn. If people want to learn something they do, if they don’t, they don’t. Nobody has to go to school to fish, play video games, or be a car guy, but all of those things have crazy high ceilings of knowledge and know how.

If you go into an industry you’re not interested in, it doesn’t matter how well you learned to learn, you’re not going to learn anything more than required. For me, I’m constantly learning things from blogs, debates, and questions I find myself asking both for personal projects and professional projects.

Really all a university is, is a guided study of what’s believed to be the foundational material in a field + study of a number of things that are aimed at increasing awareness across the board; that’s going to be more helpful to some than others.

If you graduate and work in a bunch of Python web code … those fundamentals aren’t really that important. You’re not going to write quicksort or bubble sort (very few people do); you’re just going to call `.sort()`. You’re also probably not going to care about Big-O; you’re probably just going to notice organically “hey this is really bad and I can rearrange it to cache the results.” A bunch of stuff like that will probably come up that you’ll never even pay any mind to, because the size of N is never large enough for it to matter in your application.
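A minimal Python sketch of the two everyday habits described above, assuming a hypothetical `expensive_lookup` function standing in for a slow call: the standard library’s `functools.lru_cache` caches repeated results, and the built-in `list.sort()` replaces any hand-written sorting routine.

```python
from functools import lru_cache

call_count = 0  # counts how many times the "expensive" body actually runs


@lru_cache(maxsize=None)
def expensive_lookup(key: str) -> int:
    """Hypothetical stand-in for a slow call (database query, HTTP request, ...)."""
    global call_count
    call_count += 1
    return len(key) * 10


requests = ["alice", "bob", "alice", "carol", "bob", "alice"]
results = [expensive_lookup(r) for r in requests]

# No hand-written quicksort or bubble sort: the built-in sort does the job in place.
requests.sort()

print(results)     # [50, 30, 50, 50, 30, 50]
print(call_count)  # 3: one real call per distinct key, the rest are cache hits
print(requests)
```

The cache only pays off when the same inputs repeat and the function has no side effects you rely on; for small N, as the comment says, none of this may matter.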

… personally I think our education system needs to be redone from the ground up. It creates way more stress than it justifies. The focus should be on teaching people important lessons that they can actually remember into adulthood, not cramming brains with an impossible amount of very specific information under the threat of otherwise living a “subpar” life.

Older societies I think had it right with their story form lessons, songs, etc. They made the important lessons cultural pieces and latched on to techniques that actually help people remember instead of just giving them the information with a technique to remember it and then being surprised when a huge portion of the class can’t remember.

Edit: To make a software metaphor, we’ve in effect decided as a society to use inefficient software learning functions driven by the prefrontal cortex instead of making use of the much more efficient intrinsics built into the body by millions of years of evolution to facilitate learning. We’re running bubble sort to power our education system.

This, and also: nothing is 100% new, so knowledge in similar areas will always help.

because of AI

Oh look, a bullshit article.

You need to learn the fundamentals of how things work, and how to apply those fundamentals, not rote specifics of a particular technology.

I didn’t know the laws of physics were getting obsolete. God dammit

Man, the medical folks are going to flip when uterus 2.0 goes live

Crypto and AI have rewritten the book on the laws of physics. Now you can defy gravity with AI!

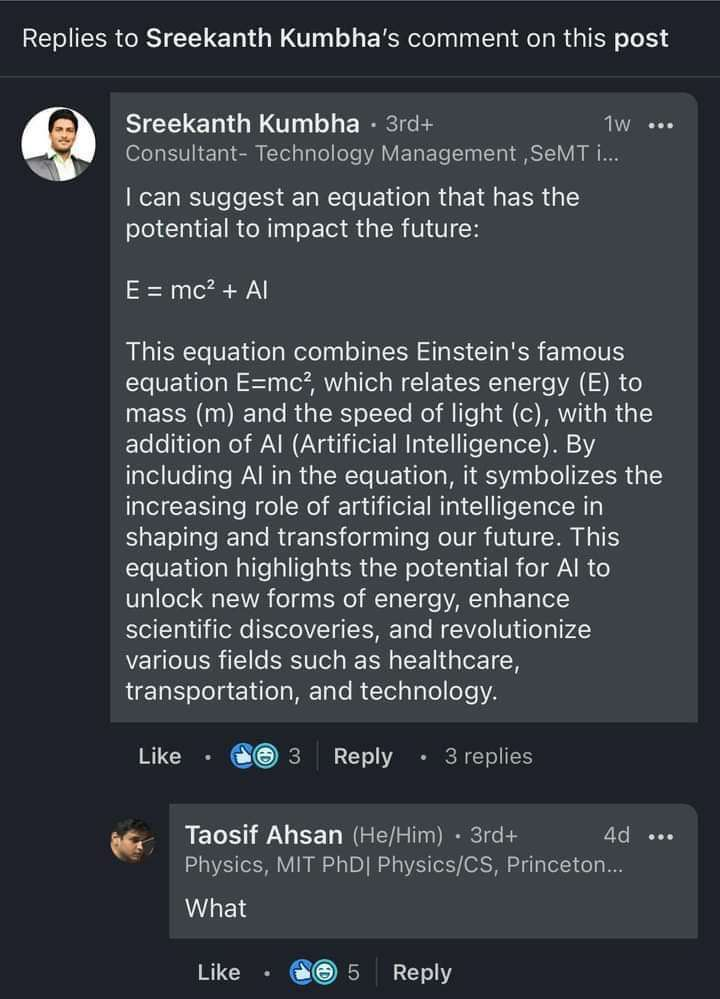

Meme

Text version of meme

A linkedin post by Technology Management Consultant Sreekanth Kumbha that reads: "I can suggest an equation that has the potential to impact the future:

E = mc² + AI

This equation combines Einstein’s famous equation E=mc², which relates energy (E) to mass (m) and the speed of light (c), with the addition of AI (Artificial Intelligence). By including AI in the equation, it symbolizes the increasing role of artificial intelligence in shaping and transforming our future. This equation highlights the potential for AI to unlock new forms of energy, enhance scientific discoveries, and revolutionize various fields such as healthcare, transporation, and technology."

MIT PhD in Physics and CS Taosif Ahsan replies: “What”

Tell that to 2/3rds of JS/Node devs 😬

Fundamentals, what are those?

Mostly bullshit, because the ultimate goal of college isn’t to make you learn the basic facts you need to graduate; instead, the ultimate goal is to teach you how and where to learn about new developments in your field, and where to look up information you don’t know or don’t remember.

Yes, but the how and where to learn are changing too, which is the problem

My answer is always in books. I don’t really know what changed.

I mean, if your answer is always books, then it would make sense that one might not be aware of change. It takes a long time to create a book and things are changing at a much more rapid pace, so your learnt “skill” is no longer relevant in today’s job market even though it may be new to you.

Every technology or framework is documented somewhere, whether it’s in a book or in its documentation. Once you have the basics, you can update your own knowledge with more books or docs.

I don’t know of any piece of technology that is changing so fast that it’s not documented anywhere. At most you have ReactJS, which is a weird usage of JavaScript, but you can still find the docs and learn. Or maybe Unity vs. Godot, but we’re talking about long-term education, not framework fights.

Godot is pretty well documented, idk what you’re talking about.

I never said that it lacked documentation. I said there is a more universal underlying theory that you can learn and which hasn’t changed in years.

Yeah, when I was in school, the high-tech and tech stuff we learned was already obsolete.

This has to be the stupidest AI take yet.

Was learning to do math made “obsolete” by calculators?

One thing I found especially dumb is this:

Jobs that require driving skills, like truck and taxi drivers, as well as jobs in the sanitation and beauty industries, are least likely to be exposed to AI, the Indeed research said.

Let’s ignore the dumb shit Tesla is doing. We already see self-driving taxis on the streets. California allows self-driving trucks already, and truck drivers are worried enough to petition California to stop it.

Both of those involve AI - just not generative AI. What kind of so-called “research” has declared 2 jobs “safe” that definitely aren’t?

I mean, to some degree … yes. Day to day, I do very little math. If it’s trivial, I do it in my head; if it’s more than a few digits, I just ask a calculator, because I always have one and it’s not going to forget to carry the 1 or whatever.

Long division, I’ve totally forgotten.

Basic algebra, yeah I still use that.

Trig? Nah. Calc? Nah.

You don’t take college-level math to do basic calculations. You take college-level math because you need to learn how to actually fully understand and apply mathematical concepts.

I hear this all the time that there’s some profound mathematical concept that I had to go to college to learn … what exactly is that lesson? What math lessons have changed your life specifically?

Also the comment I was replying to was about doing math. Mathematical “concepts” aren’t exactly “doing math.”

Calculus was a game changer. Combined with physics it completely changed my view of the world.

In what way?

It’s hard to explain. Calculus was integral (hah!) to understanding a lot of physics and allowed me to understand better how we know about our world.

For example, just being able to better understand the equations of motion was eye-opening. There were velocity and acceleration equations we’d learned previously, but now I understood how we knew them. I could also now derive a function for change in acceleration (aka jerk)!
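As a worked illustration of that point (assuming constant acceleration a₀), the familiar motion equations are successive time derivatives of position, and jerk is simply the next derivative in the chain:

```latex
\begin{aligned}
x(t) &= x_0 + v_0 t + \tfrac{1}{2} a_0 t^2 \\
v(t) &= \frac{dx}{dt} = v_0 + a_0 t \\
a(t) &= \frac{dv}{dt} = \frac{d^2 x}{dt^2} = a_0 \\
j(t) &= \frac{da}{dt} = \frac{d^3 x}{dt^3} = 0
\end{aligned}
```

Going the other way, integrating the constant a₀ twice (starting from v₀ and x₀) recovers x(t), which is one way of seeing how we know the earlier formulas.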

Trig had a similar impact though that is taught at a high school level not college. The two combined are quite useful for programming things with motion.
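A small sketch of trig in motion code (a hypothetical 2D example, not taken from any particular engine): cosine and sine split a speed and heading into x and y velocity components, and multiplying by a time step advances the position.

```python
import math


def step(x: float, y: float, heading_deg: float, speed: float, dt: float) -> tuple[float, float]:
    """Advance a point moving at `speed` units/s in direction `heading_deg` for `dt` seconds."""
    theta = math.radians(heading_deg)
    # Trig splits the speed into horizontal and vertical velocity components.
    vx = speed * math.cos(theta)
    vy = speed * math.sin(theta)
    # Position changes by velocity times elapsed time.
    return x + vx * dt, y + vy * dt


# Hypothetical example: a sprite moving at 10 units/s, heading 30 degrees, for 2 seconds.
x, y = step(0.0, 0.0, 30.0, 10.0, 2.0)
print(round(x, 3), round(y, 3))
```

A game loop would typically call something like this every frame with a small `dt`; the name `step` and its parameters are illustrative only.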

Honestly, if you have to ask, you’ll never know. You’re just not emotionally invested in the real world and if you were, you’d see the value of high-level math everywhere. But you don’t, and that’s entirely your fault. No one is going to inspire you for you.

So you’re still doing math then. And using a calculator as a tool to assist you.

Just like we’ll be doing with AI.

Learning professional skills? In college? My guy, that’s not what it’s about. Especially at universities, it’s not about learning professional skills as much as it is networking and earning a piece of paper that proves you can commit to something.

E.g. my university was still teaching the 1998 version of C++ in the late 2010s. The first job I had out of school used C++17. Was I fucked? No, because the languages I learned were far less important than how I learned to learn them.

I think the real issue is with schooling before college, and this article seems to be looking at college as the same sort of environment as the previous 12 years of school, which it isn’t. So much of everything through high school has become about putting pressure on teachers to hit good grades and graduating student percentages that actually teaching kids how to learn and how to collaborate with others has become a tertiary goal to simply having them regurgitate information on the tests to hit those 2 metrics.

I have taught myself a number of things on a wide range of subjects (from art to 3D printing to car maintenance and more; city planning and architecture are my current subjects of interest), and when people ask about learning all this stuff, I’ve always said that I love to learn new things, despite the school system trying to beat it out of me. I dropped out of college despite loving my teachers and the college itself, both because I didn’t like my major (the school was more like a trade school; we chose our majors before we even got to the college) and because I had never learned how to learn in the previous 12 years of school. I learned how to hold information just long enough to spit it out on the test and then forget it to make room for the next batch for the next test. Actually learning how to find information and internalize it through experience came after I left school.

GPT is not equipped to care about whether the things it says are true. It does not have the ability to form hypotheses and test them against the real world. All it can do is read online books and Wikipedia faster than you can, and try to predict what text another writer would have written in answer to your question.

If you want to know how to raise chickens, it can give you a summary of texts on the subject. However, it cannot convey to you an intuitive understanding of how your chickens are doing. It cannot evaluate for you whether your chicken coop is adequate to keep your local foxes or cats from attacking your hens.

Moreover, it cannot convey to you all the tacit knowledge that a person with a dozen years of experience raising chickens will have. Tacit knowledge, by definition, is not written down; and so it is not accessible to a text transformer.

And even more so, it cannot convey the wisdom or judgment that tells you when you need help.

Joke’s on you, the stuff that my college tried to teach me was obsolete a decade before I was even born thanks to tenured professors who never updated their curriculum. Thank fuck I live in the Internet age.

This is such a shit and out of touch article, OP. Why bring us this crap?

Claiming modern day students face an unprecedentedly tumultuous technological environment only shows a bad grasp of history. LLMs are cool and all, but just think about the postwar period where you got the first semiconductor devices, jet travel, mass use of antibiotics, container shipping, etc etc all within a few years. Economists have argued that the pace of technological progress, if anything, has slowed over time.

I don’t think that latter statement is right, and if you’ve got some papers I’d love to read them. I’ve never heard an economist argue that. I have heard them argue that productivity improvement is declining despite technological growth, though; more that it’s decoupling from underlying technology.

Robert Gordon and Tyler Cowen are two economists who have written about the topic. Gordon’s writings have been based on a very long and careful analysis, and has influenced and been cited by people like Paul Krugman. Cowen’s stuff is aimed at a more non-academic audience. You should be able to use that as a starting point for your search.

In theory, you go to college to learn how to think about really hard ideas and master really hard concepts, to argue for them honestly, to learn how to critically evaluate ideas.

Trade schools and apprenticeships are where you want to go if you want to be taught a corpus of immediately useful skills.

This has arguably always been the case. A century ago, it could take years to get something published and into a book form such that it could be taught, and even then it could take an expert to interpret it to a layperson.

Today, the expert can not only share their research, they can do interviews and make TikTok videos about a topic before their research has been published. If it’s valuable, 500 news outlets will write clickbait, and students can do a report on it within a week of it happening.

A decent education isn’t about teaching you the specifics of some process or even necessarily the state-of-the-art, it’s about teaching you how to learn and adapt. How to deal with people to get things accomplished. How to find and validate resources to learn something. Great professors at research institutions will teach you not only the state-of-the-art, but the opportunities for 10 years into the future because they know what the important questions are.

Ultimately, we’re running into uptake as the limiting factor. It’s not going to be a matter of how quickly new techniques get turned out, but rather how quickly they can be effectively applied by the available communities. It’s going to start with the new tech being overhyped, less-than-savvy managers demanding that the new product has X as a feature, and VCs running at it because of FOMO; then the gap between promise and capability will run straight into the fact that no one knows what they’re doing with the new tech, especially when it comes to integrating with existing offerings.

It’s going to cause a demand shock.

What do you mean by ‘obsolete’?

I mean, that’s really only true for compsci. While scientific and technological advances will indeed be made in STEM in general, they aren’t that fast or significant enough to make what was learned unviable.

Not even for compsci, though. The things I learned about in my bachelor’s and master’s didn’t suddenly get made obsolete.

I’d like to see the innovation that makes algorithm theory obsolete.

Fair. I was thinking more about changes in coding language usage, but I suppose that also depended on when you were attending university. There have been periods where things changed faster in compsci than other periods.

The basic algorithms and mathematics are still the same, though. Maybe the implementations will be different 5 years from today, but there’s not going to be a revolution in mathematics in 5 years that makes teaching calculus useless.