The article title is straight up misinformation at present. From the article itself:

The FuryGPU is set to be open-sourced. “I am intending on open-sourcing the entire stack (PCB schematic/layout, all the HDL, Windows WDDM drivers, API runtime drivers, and Quake ported to use the API) at some point, but there are a number of legal issues,” Barrie wrote in a Hacker News post on Wednesday. Because he works in a tangentially related vocation, he wants to make sure none of this work would break his work contract or licensing etc.

Nothing against OP who simply copied the title, nor the project author. This is impressive but it’s not yet open source and there may be legal hurdles preventing it from becoming so.

That’s fair. I’m hoping for the best outcome.

If they never release the source, including all the FPGA Verilog files, then this is pointless to the open source community.

Edit: actually I just realized my comment is kind of pointless. Even if he released the FPGA source code, a thing a lot of projects like these never do, it still wouldn’t be possible to reproduce one of these using only free and open source software. This is because, as far as I know, the only FPGAs that let you program them using open source software, and not a locked-down Windows-only bloatfuck program that needs an internet connection and licensing, are the Lattice iCE40 FPGAs. Tl;dr this can’t be fully “open source”.

I wonder if it would be possible to make an iCE40-based video card that could still do OpenGL.

You could probably fab it onto an ASIC, which avoids the non-free software part (aside from the fab itself). So still way cool.

Open source fpgas cost up to $10 per chip, $17 if you want the big chungus 256 pin one with lots of extra memory and logic blocks. You can get pcb printing services for like $7 per board but I think I paid less than that last time I built something.

I’m pretty sure custom made ASICs cost orders of magnitude more than that.

For one, sure, but I’m guessing prices come down quite a bit once we’re talking larger numbers, like hundreds of thousands.

But those are really good prices though.

I’d be willing to help fund a lawyer reviewing things to ensure it can be open sourced.

Ah, so that’s why it supports Windows. OK.

All this text, yet nowhere is it mentioned whether it runs Doom, clearly the most important thing to run on any device.

I’m gonna go out on a limb and say if it can run Quake, it can safely run Doom as well.

The original Voodoo 1 graphics card could run Quake, but NOT Doom. Thanks Obama!

Only because the Voodoo couldn’t do 2D at all - it had a passthrough on the back, so you’d connect your 2D-capable graphics card to it.

Oh, I’m aware! It just felt funny that the very first consumer dedicated 3D graphics card proved that poster’s assumption wrong. In any other case they’d be right. In fact, in those days in 1996, there was the SECOND graphics card that had a 3D processor that DID do 2D graphics too, the Sierra Screamin’ 3D (with the Rendition Verite GPU). It was about 2/3 the cost of the Voodoo 1 (3DFX) even if the Sierra wasn’t quite as fast. You’d buy the Sierra because you wanted dedicated 3D but couldn’t afford both a high end 2D card and the high end 3D card.

As an enormous Id Tech nerd that’s too young to know this, this is very interesting!

IIRC the Voodoo 5 6000 also required an external power supply and people thought this was crazy at the time.

Fuck that could be interpreted very racially with regards to Obama

You’re going to have to explain that to me. I’m not seeing it.

Obama seen as a black person could be accused of being able to do ‘black magic’ or voodoo.

It’s just a dumb thought, nothing more to it.

And if it can run Quake 3, it can likely run Jedi Knight II: Jedi Outcast.

Which would make it worth the effort for me.

OG Doom does not support (or need) hardware 3D acceleration. It’s not a polygonal rendering engine.

Relatedly, and probably not to anyone’s surprise, this is why it’s so easy to port to various oddball pieces of hardware. If you have a CPU with enough clocks and memory to run all the calculations, you can get Doom to work since it renders entirely in software. In its original incarnation (modern source ports have since worked around this) it is nonsensical to run Doom at high frame rates anyhow, because it has a locked 35 FPS frame rate, tied to the 70 Hz video mode it ran in. Running it faster would make it… faster.

(Quake can run in software rendering mode as well with no GPU, but in the OG DOS version only in 320x200 and at that rate I think any modern PC could run it well north of 60 FPS with no GPU acceleration at all.)

The OG Doom engine uses pre-built lookup tables for fixed-point trigonometry. (A single 10240-element table covers the full 360 degrees for both sine and cosine.)
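
For anyone curious what that looks like in practice, here is a rough Python sketch of a fixed-point sine table, not the engine's actual C code. The 16.16 fixed-point format, the 8192 steps per circle, and the half-step sampling offset are my assumptions about how you land on a 10240-entry table (8192 plus an extra quarter turn so cosine can reuse the same data); the names `fine_sine`, `fine_cosine`, and `fixed_mul` are made up for the example.

```python
import math

FRACBITS = 16                      # assumed 16.16 fixed-point format
FRACUNIT = 1 << FRACBITS           # 65536 represents 1.0
FINEANGLES = 8192                  # assumed number of angle steps per full circle
TABLE_SIZE = 5 * FINEANGLES // 4   # 10240: a full circle plus one extra quarter turn

# Build the table once. Sampling at the midpoint of each step is an
# assumption of this sketch, not something stated in the thread.
FINESINE = [
    int(round(math.sin((i + 0.5) * 2.0 * math.pi / FINEANGLES) * FRACUNIT))
    for i in range(TABLE_SIZE)
]


def fine_sine(angle):
    """Fixed-point sine of an angle given in 1/8192ths of a circle."""
    return FINESINE[angle % FINEANGLES]


def fine_cosine(angle):
    """cos(x) = sin(x + 90 degrees), so read the same table a quarter turn ahead.

    The extra 2048 entries at the end of the table mean this index never
    has to wrap around.
    """
    return FINESINE[(angle % FINEANGLES) + FINEANGLES // 4]


def fixed_mul(a, b):
    """Multiply two 16.16 fixed-point numbers using only integer operations."""
    return (a * b) >> FRACBITS
```

At runtime a sine or cosine is then one array read, and scaling a distance by it is one integer multiply and a shift, which is the whole point on a CPU without fast floating point.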

Tons of software did this for the longest time. Lookup tables have been a staple of home computing for as long as home computers have existed.

And CPUs still do it to this day. Nasty, nasty maths involved in figuring out an optimal combination between lookup table size and refinement calculations because that output can’t be approximate, it has to work how IEEE floats are supposed to work. Pure numerology.

I really like reading people talk about how better programmers in a more civilized age could do things.

Anyone who has ever had to maintain old code will tell you that this more civilized age is right now and that the past was a dark and terrible time.

Seriously, there were no standards, there was barely any documentation even in large organizations and people did things all the time that would get you fired on the spot today. Sure, you had the occasional wunderkind performing amazing feats on hardware that had no business of running these things, but this was not the norm.

I didn’t have to maintain it, actually I haven’t ever worked as a programmer. But I’ve patched a few FOSS things abandoned around 15-25 years before that to work as a hobby activity, some kind of digital archeology.

I think people also do things now for which they’d be fired on the spot 20 years ago. Everything changes.

I suspect what you call “no standards” means in fact “different standards”, but that’s just a cultural difference. Some project from 1995 may use “Hungarian notation” in variable names, well, that was normal then.

That adequate version control and documentation are, eh, a bit more of a norm now: yes.

I remember those old games that would run faster to the point of hilarity if you put them on anything more modern than they were originally intended to run on. Like the game timing is tied to the frame rate.

This was by and large the reason for the “turbo” buttons on all those 286 and 386 computers back in the day. Disengaging the turbo would artificially slow down your processor to 8086 speed so that all your old games that were timed by processor clock speed and not screen refresh or timers would not be unplayably fast.

Quite a few more modern games have their physics tied to frame rate – if you manage to run them much faster than the hardware available at the time of their releases could, they freak out. The PC port of Dark Souls was a notorious example, as is Skyrim (at least the OG, non “Legendary Edition” or SE versions).

It’s embarrassing when a modern game does that. Game Programming 101 now tells you to keep physics and graphics loop timing separate. Engines like Unreal and Godot will do it for you out of the box. I’m pretty sure the SDL tutorials I read circa 2003 told you to do it. AAA developers still doing it on this side of 2008 should be dragged outside and shot for the good of the rest of us.
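
A minimal sketch of what keeping the two loops separate means, in Python rather than any particular engine's API. The function names and the 100 Hz physics rate are hypothetical, and times are integer microseconds just to keep the arithmetic exact. Physics advances in fixed steps drawn from an accumulator, and rendering happens once per frame no matter how long the frame took.

```python
PHYSICS_DT_US = 10_000  # hypothetical fixed physics step: 10 ms, i.e. 100 Hz


def run_fixed_timestep(frame_times_us, dt_us=PHYSICS_DT_US):
    """Return (physics_steps, frames_drawn) for a list of frame durations."""
    accumulator = 0
    steps = 0
    frames = 0
    for frame_time in frame_times_us:
        accumulator += frame_time
        # Consume the elapsed time in fixed-size slices.
        while accumulator >= dt_us:
            steps += 1            # a real game would advance physics by dt here
            accumulator -= dt_us
        frames += 1               # draw once per frame, fast or slow
    return steps, frames


def run_frame_locked(frame_times_us):
    """The problematic pattern: exactly one physics step per rendered frame."""
    return len(frame_times_us), len(frame_times_us)
```

One second of play at 40 FPS and one second at 200 FPS both come out to 100 physics steps with the first function, while the frame-locked version runs the simulation five times as often on the faster machine.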

Yeah, it’s about time someone put down Bethesda.

I didn’t even know they still did it. I just remember games in the 80s and 90s doing that.

GTA III, Vice City, and San Andreas too.

On launch, Spyro: Reignited Trilogy had a level you couldn’t complete unless you changed the settings to lock it to 30fps. It’s probably been patched by now, but was that ever infuriating.

Oh, you mean Fallout 4?

Command and Conquer Generals lets you choose the game speed for skirmish matches: the natural cap of 60, plus an option to uncap. You need superhuman reflexes to play with an uncapped speed on modern hardware!

Some older machines (386/486 era) used to have DIP switches, and eventually ‘turbo buttons’, that accomplished the same thing: toggling them would cut the clock speed so older, speed-sensitive software stayed usable. Those turbo buttons were more of a ‘valet mode’ than anything, but it all died out before the Pentium/Athlon era.

Interesting, learned something new from my silly comment!

Also, this’ll blow your mind too, Doom wasn’t actually 3D. It was a clever trick involving the lack of the ability to look up and down. They used some sort of algorithm (I forget how it works exactly) to turn the 2D walls, doors, and platforms that appear from the top-down view in the map into vertical stacks of lines that “look” like 3D objects in front of you. The sprites are also all just 2D projections overlayed onto the game.

This system introduced all kinds of weird quirks in the game, like the trippy effect you get when you activate no-clipping and clip through the edge of the map.
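
Roughly, the idea of turning a flat map into vertical stacks of lines can be sketched like this. It is a generic column projection in Python, not Doom's actual renderer; the function name, the 200-row screen, and the focal length of 160 are all assumptions for illustration. The map only stores a floor height and a ceiling height for a wall, and each screen column gets one vertical run whose size shrinks with distance.

```python
def project_wall_column(distance, floor_z, ceiling_z, eye_z,
                        screen_height=200, focal_length=160.0):
    """Return (top_row, bottom_row) of a wall slice in one screen column.

    distance:  how far away the wall is along this column's view direction
    floor_z, ceiling_z: heights of the wall's bottom and top edges
    eye_z:     height of the viewer's eye
    """
    if distance <= 0:
        raise ValueError("wall must be in front of the viewer")
    scale = focal_length / distance      # farther walls get a smaller scale
    center = screen_height / 2           # horizon row, fixed: no looking up or down
    top = int(center - (ceiling_z - eye_z) * scale)
    bottom = int(center - (floor_z - eye_z) * scale)
    # Clamp to the visible rows of the screen.
    top = max(0, min(screen_height, top))
    bottom = max(0, min(screen_height, bottom))
    return top, bottom
```

Because the horizon row never moves and every wall is a straight vertical run, the renderer never has to deal with a wall edge that is tilted on screen, which is what keeps the per-column work so cheap.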

Like for instance, monsters and other sprite objects in the original incarnation of the Doom engine have infinite height. So you can’t step on top of, or over, any monsters if e.g. you are on a ledge high above them. That’s because they’re 2D objects, and their vertical position on the screen is largely only cosmetic. This is why you can’t run under a Cacodemon, for instance.

“Actors” (monsters, etc.) in Doom do have defined heights, but presumably for speed purposes the engine ignores this except for a small subset of checks, namely for projectile collision and checking whether a monster can enter a sector or if the ceiling height is too low, and for crush damage.
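
To make the "infinite height" behaviour concrete, here is a small Python sketch of the two kinds of check. It is an illustration under my own assumptions (the `Actor` fields, the box-style overlap test, and both function names are hypothetical), not the engine's actual collision code.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    x: float
    y: float
    z: float        # height of the actor's feet
    radius: float
    height: float


def blocks_move_2d(mover, other):
    """Top-down overlap only, so the other actor acts as if infinitely tall."""
    reach = mover.radius + other.radius
    return abs(mover.x - other.x) < reach and abs(mover.y - other.y) < reach


def blocks_move_3d(mover, other):
    """Same check, but also lets the mover pass entirely above or below."""
    if not blocks_move_2d(mover, other):
        return False
    above = mover.z >= other.z + other.height
    below = mover.z + mover.height <= other.z
    return not (above or below)
```

With a player standing on a ledge 200 units up and a monster on the floor directly underneath, the 2D check reports a collision while the 3D check does not, which is the difference between being stuck on the ledge and being able to walk off over the monster's head.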

This was rectified in later versions of the Doom engine as well as most source ports. By the time Heretic came out (which is just chock-a-block full of flying enemies and also allows the player to fly with a powerup) monsters no longer had infinite height.

Most notably perspective only gets calculated on the horizontal axis, vertically there is no perspective projection. Playing the OG graphics with mouse gets trippy fast because of that. Doom doesn’t use much verticality to hide it. Duke Nukem level design uses it more and it’s noticeable but still tolerable. Modern level design with that kind of funk, forget it.

I learned recently that the Jedi Engine for the original Dark Forces had an additional trick. You could have a hallway over another hallway–which Doom cannot–but you can’t see both hallways at the same time. So there might be a bridge over a gorge, but the level design forces it so it’s a covered bridge, and you wouldn’t have an angle where you could see inside the bridge and down into the gorge.

Duke Nukem can do that, too, both it and Dark Forces use portal engines while Doom is a BSP engine. With a portal engine you’re not bound to a single global coordinate system, you can make things pass through each other.

It’s not actually a feature of the renderer; you can do the same using modern rendering tech, though I can’t off the top of my head think of a game that uses it. Certainly none of the big game engines support it out of the box. You can still do it by changing levels, and it wouldn’t be hard to do something half-way convincing in the Source engine (Half-Life, Portal, etc, the Valve thing), since quick level loading by mere movement is one of its core features, but it isn’t quite as seamless as a true portal engine would be.

Doom64 accomplished this by adding a silent elevator sector type, so it could have bridges that appear to be floating “over” an underpass that you could walk through but you could also cross over the top. This, of course, immediately got turned into marketing bullshit trying very hard to imply that “Doom64 was fully truly 3D, and the Doom engine could now do room-over-room.”

Which it can’t. These weren’t bridges, they were cleverly disguised elevators.

What you eventually notice is that you can never look at one of these bridges from below and then from above, or vice versa, without first passing through a tunnel or building that completely obscures your view of it. When your view is obstructed, you cross over a trigger somewhere that causes the elevator to, without making any sound (because elevator sounds were hard coded into original Doom), zip up to its cross-over-the-top position or its walk-under-the-bottom position. It could only ever be in one state at a time, never both.

Here’s a video that explains the limitations of the DOOM engine and with it also briefly how the rendering part of it works (from 4:08 onward) in a very accessible manner:

If you want a more in-depth explanation with a history lesson on top (still accessible, but much heavier), there’s this excellent video:

Here is an alternative Piped link(s):

https://piped.video/ZYGJQqhMN1U

https://piped.video/hYMZsMMlubg

Ooooo I absolutely want these—thank you!

Original Doom was not GPU accelerated.

Neither was original Quake. GLQuake was a later update. The original was for DOS using only software rendering.

Yup, and most people played it at something like 10 to 15 fps on hardware of the time. Same with DOOM a couple of years earlier.

With a resolution of 320x240. And I do not remember how many colors. 256?

Yes, with a fixed color palette of mostly shades of brown and green.

What about Crysis?

Asking the real questions. Anything can run Doom nowadays. I’ve seen it run on a pregnancy test.

Yep, Doom runs on literally anything: fridges, toasters, pregnancy tests, and more.

But Crysis… that’s a litmus test.

Do we even have anything that can run Crysis yet?

At this point I’m convinced Doom can run on anything

There’s a real challenge out there for the Cray 1. On paper, it appears fast enough, but the architecture makes it difficult to impossible in practice.

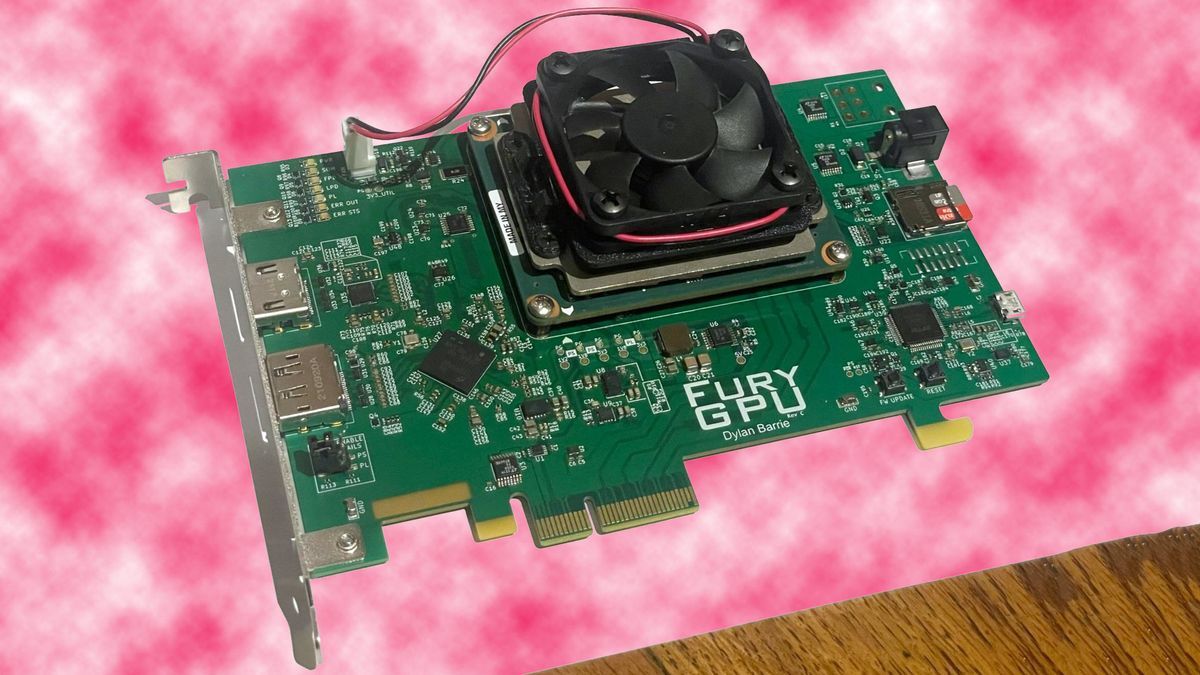

Subsequently, the project got a boost by the debut of Xilinx Kria System-on-Modules (SoMs), which combine “insanely cheap Zynq UltraScale+ FPGAs with a ton of DSP units and a (comparatively) massive amount of LUTs and FFs, and of particular interest, a hardened PCIe core,” enthused Barrie.

Yes, I understand, the bippity uses mumps in order for the many lutes to flips those zupps in their pacas.

FPGA

Awww, I thought this was an ASIC. Slapping an FPGA on a PCIe card is decidedly less cool. Still, props for creating a usable GPU circuit description, that must have been a nightmare.

In a situation where there are affordable (for this purpose) FPGAs, that’s more cool, not less. ASICs you actually have to order from somewhere to get produced.

And one can order ASICs from that description, no?

I keep reading the word Fury as Furry

FurryGPUwU

Did you mean MIAOW and Nyuzi?

Same, I wasn’t surprised either. I could see a furry making a GPU for fun

Or at least funding it.

Something something furmark

Yeah, why don’t they lean into it and call it FurryGPU? Much better.

one of us…?

Yes

That would be disgusting.

*awesome

Nah nothing awesome about furries.

lots awesome about blocking you

Damn a dog fuckers gonna block me.

Fuck yes. When normal modern video cards start costing too much for the common person to afford, at least we’ll still be able to play quake.

Or Xonotic

I’m not much of a gamer, Gnome Mahjong is mostly good enough for me, but I have enjoyed Xonotic. It’s a pretty fast play.

What year is it?!

The way people dress, Quake, it’s 1996!

Somebody rolled a five or an eight!

Found this demo when looking up the Quake test.

RISC-V plans to make ISA extensions that will enable it to work better in graphics applications. Look forward to truly open-source graphics

I’m confused, he made a homemade GPU that can’t be mass-produced, and it runs a 30 year old game at 44 fps, and it may (or may not) actually become open source, and I’m supposed to be excited about it?

You’re not supposed to be anything. It’s a pretty cool feat by one person though.

Open-source physical technology is only in its infancy; you may be excited about this trend.

I’ve seen open-source hacking tools, openassistant wireless connectors, complete keyboards.

It’s about time someone started on open-source proper PC hardware, no matter how small-scale it starts.

Imagine a future where you can 3D print a 2D printer and its refillable cartridges at home, with extensive manuals on DIY repairs and maintenance and no costs beyond the raw resources and your time.

Open source demonstrates humans cooperating with no profit incentive. Exactly what capitalism calls impossible. When I first learned about Linux it felt incredibly lacking compared to Windows; nowadays it’s my main OS, and it has surpassed Windows in everything except good Nvidia drivers.

You should be impressed. Integrated circuits are insanely complex, and any general purpose processing hardware since the 90s is way too complicated for the human mind to comprehend.

If you’re not interested then no, you shouldn’t be excited about it.